Before the emergence of MCP (Model Context Protocol), AI application development, especially applications based on large language models (LLMs), mainly faced the following core issues:

Fragmented Interfaces

Different data sources (databases, APIs) and tools (such as Cursor and Cline) each expose their own invocation method. Developers have to write separate adapter code for every external resource, which leads to a great deal of duplicated work.

Functional Limitations

LLMs rely solely on static knowledge from pre-training. They cannot obtain real-time external information (such as the latest news or user data) or directly call tools (such as IDEs). As a result, their answers can be out of date and of limited practical use.

High Development Costs

Each project has to design its interaction logic from scratch. Moreover, whenever an external interface changes, the integration has to be reworked and debugged again, which significantly increases maintenance costs.

Ecosystem Fragmentation

Tools developed by different teams are incompatible with each other (for example, a knowledge base plugin from Company A cannot be integrated into Company B's system), forming "tool islands" and hindering technology sharing.

Security and Debugging Risks

Non-standardized communication protocols can pose security risks (such as unverified data invocation paths). The fragmented interaction methods also increase the difficulty of error troubleshooting.

These problems have led to low development efficiency and poor scalability of AI applications, limiting the practical implementation capabilities of large models. MCP was born to address these issues.

What is MCP?

MCP (Model Context Protocol) is an open protocol launched by Anthropic to solve the communication problems between large language models (LLMs) and external data sources and tools.

MCP Architecture

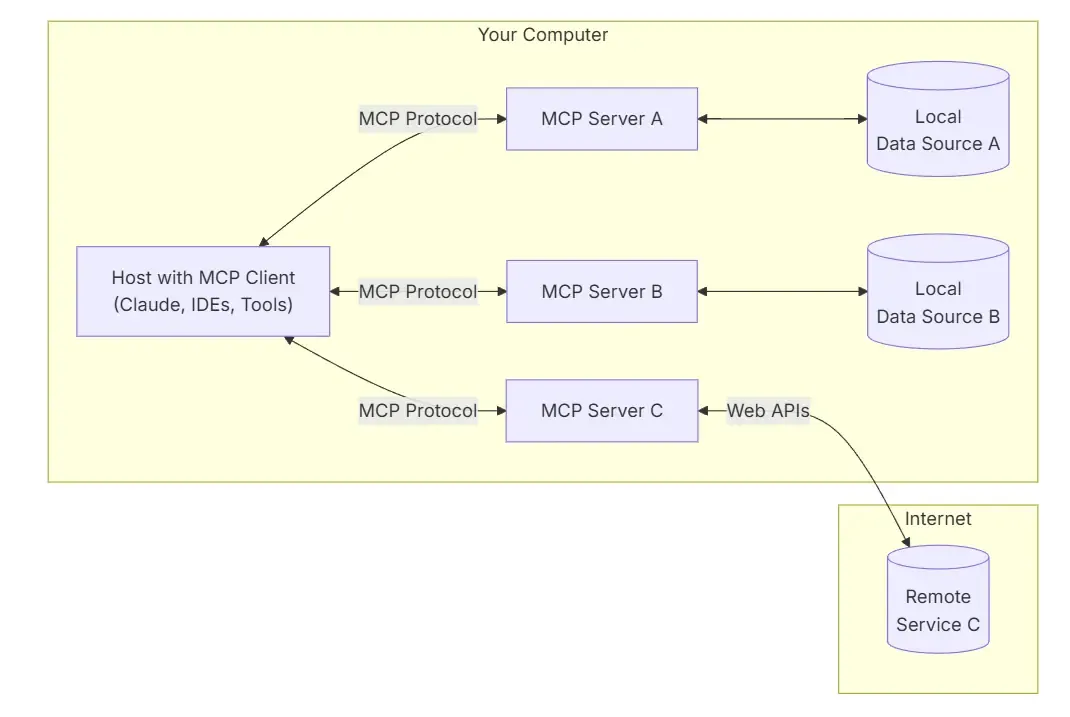

MCP adopts a client-server architecture with bidirectional communication over a standardized JSON message format (JSON-RPC 2.0). Clients (such as Cursor or Claude) can call tools exposed by a server through MCP (such as the GitHub API or a local database) without having to develop a separate adapter for each service. For example, through an MCP server that wraps the Slack API, Cursor can read user requirements from Slack and automatically trigger the code generation process.
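To make the "standardized JSON message format" concrete, here is a minimal sketch of what a tool invocation could look like on the wire. It assumes the JSON-RPC 2.0 envelope and the `tools/call` method used by MCP; the tool name `read_channel_messages` and its arguments are hypothetical and only serve as an illustration.

```python
import json
from itertools import count

# Simple counter so that every request gets a unique id, as JSON-RPC 2.0 expects.
_ids = count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request object as a plain dict."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_tool_call(tool_name, arguments):
    """Build a 'tools/call' request for a tool exposed by a server."""
    return make_request("tools/call", {"name": tool_name, "arguments": arguments})


# Hypothetical tool and arguments, for illustration only.
request = make_tool_call(
    "read_channel_messages",
    {"channel": "#requirements", "limit": 5},
)

# This string is what would travel over the transport to the server.
wire = json.dumps(request)
print(wire)
```

Because every server understands the same envelope, the client only needs to change the tool name and arguments, not the integration code.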

In the MCP architecture, one host application can connect to multiple servers, as the architecture diagram above shows.

The architecture consists of the following components:

• MCP Host: Programs such as Claude Desktop, integrated development environments (such as Cursor), or other AI tools that want to access data through MCP.

• MCP Client: A protocol client that maintains a one-to-one connection with a server. It receives user requests, converts them into a format that the AI model can understand, receives responses from the AI model, and returns them to the user.

• MCP Server: A lightweight program that exposes specific functions through the standardized Model Context Protocol. It receives requests from the MCP Client, calls the corresponding data sources and tools according to the request type, obtains the required data, and returns the results to the MCP Client.

• Local Data Sources: Files, databases, and services on your computer that the MCP Server can securely access.

• Remote Services: External systems available over the Internet (for example, through APIs) that the MCP Server can connect to.
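The relationship between these components can be sketched as a toy, in-process model. This is not the real MCP SDK: the classes `ToyHost`, `ToyClient`, and `ToyServer` are hypothetical, the transport is a direct method call, and the tool listing is simplified to names only. It only illustrates that a host holds one client per server and that each server hides its own data source behind the same request shape.

```python
class ToyServer:
    """Stands in for an MCP Server: exposes named tools backed by a data source."""

    def __init__(self, name, tools):
        self.name = name
        self._tools = tools  # maps tool name -> Python callable

    def handle(self, request):
        """Answer a JSON-RPC-style request dict with a response dict."""
        base = {"jsonrpc": "2.0", "id": request["id"]}
        method = request["method"]
        if method == "tools/list":
            tools = [{"name": n} for n in sorted(self._tools)]
            return {**base, "result": {"tools": tools}}
        if method == "tools/call":
            params = request["params"]
            fn = self._tools.get(params["name"])
            if fn is None:
                return {**base, "error": {"code": -32602, "message": "Unknown tool"}}
            value = fn(**params.get("arguments", {}))
            return {**base, "result": {"content": [{"type": "text", "text": str(value)}]}}
        return {**base, "error": {"code": -32601, "message": "Method not found"}}


class ToyClient:
    """Stands in for an MCP Client: exactly one connection to one server."""

    def __init__(self, server):
        self._server = server
        self._next_id = 1

    def _request(self, method, params=None):
        request = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        if params is not None:
            request["params"] = params
        self._next_id += 1
        return self._server.handle(request)

    def list_tools(self):
        return [t["name"] for t in self._request("tools/list")["result"]["tools"]]

    def call_tool(self, name, **arguments):
        response = self._request("tools/call", {"name": name, "arguments": arguments})
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]["content"][0]["text"]


class ToyHost:
    """Stands in for an MCP Host: owns one client per connected server."""

    def __init__(self):
        self.clients = {}

    def connect(self, server):
        self.clients[server.name] = ToyClient(server)

    def all_tools(self):
        return {name: client.list_tools() for name, client in self.clients.items()}


# Two hypothetical servers: one wraps a "local data source", one wraps a function.
notes = {"todo.txt": "write MCP article"}
files = ToyServer("files", {"read_file": lambda path: notes[path]})
calc = ToyServer("calc", {"add": lambda a, b: a + b})

host = ToyHost()
host.connect(files)
host.connect(calc)

print(host.all_tools())
print(host.clients["files"].call_tool("read_file", path="todo.txt"))
print(host.clients["calc"].call_tool("add", a=2, b=3))
```

Adding a third server here means one more `connect` call on the host; neither the client nor the existing servers change.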

Differences between MCP and Function Calling

If you are not familiar with Function Calling, you can refer to my previous article: Analysis of OpenAI Function Calling New Feature.

After reading the introduction above, you may wonder whether MCP and Function Calling are the same thing. Both are indeed used to enhance how AI models interact with external data and tools, but they differ in the following ways:

| Comparison Item | MCP | Function Calling |
|---|---|---|
| Definition | A standardized protocol for communication between LLMs and external data sources and tools | The ability of an LLM to directly call pre-defined functions |
| Functions | 1. Context enhancement 2. Collaborative expansion 3. Security control | 1. Expand model functions 2. Implement the interaction between the model and external services |
| Interaction Method | Structured message passing based on the JSON-RPC 2.0 standard | The model generates function call requests, and the host executes and returns the results |
| Invocation Direction | Bidirectional; both the LLM and external tools can initiate requests | Unidirectional; initiated by the LLM through function call requests |
| Dependency Relationship | Independent of specific models and function calling mechanisms | Can be combined with protocols such as MCP, but itself does not depend on a specific protocol |
| Application Scenarios | 1. Intelligent Q&A 2. Integration of enterprise knowledge bases 3. Programming assistance | 1. Data query 2. Execution of specific tasks 3. Integration with external services |
| Example | Calling an MCP Server to fetch web page content | The model calls a weather query function to obtain real-time weather information |
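The "Dependency Relationship" row says the two can be combined. One way a host might do that is to take the function call the model produced and forward it to a server as an MCP request. The sketch below assumes a function call shaped like `{"name": ..., "arguments": "<JSON string>"}`, in the style of OpenAI Function Calling, and the JSON-RPC 2.0 `tools/call` method on the MCP side; the helper `function_call_to_mcp_request` and the `get_weather` tool are hypothetical.

```python
import json


def function_call_to_mcp_request(function_call, request_id):
    """Wrap a model-generated function call in a JSON-RPC 2.0 'tools/call' request."""
    # In this assumed shape, the model emits its arguments as a JSON-encoded string.
    arguments = json.loads(function_call["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": function_call["name"], "arguments": arguments},
    }


# Hypothetical model output: the model decided to call a weather function.
model_output = {"name": "get_weather", "arguments": '{"city": "Berlin"}'}

mcp_request = function_call_to_mcp_request(model_output, request_id=7)
print(json.dumps(mcp_request, indent=2))
```

In this arrangement Function Calling decides what to call, while MCP standardizes how the call reaches the tool.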

Online Query of MCP Services

The community has already contributed many MCP servers. You can browse them online at sites such as:

• https://glama.ai/mcp/servers

That's all for today. I'll write another article later to introduce the specific practices of MCP.